There are times when you need to block access to certain websites from your system. It could be a matter of personal discipline, so you don’t waste time on addictive websites, or your workplace may ask you to do so. Or maybe you do not want someone to access a few websites while using the computer. In any of these cases, this article will guide you through simple ways to block access to websites on a Linux system.

There are many ways and tools to block websites on Linux, but two of the easiest are:

- Using the ‘hosts’ file

- Squid

In this guide, however, I am going to focus on the first method. So let’s take control of the internet and block some websites on your computer.

Blocking websites on Linux with ‘hosts’ file

Blocking a website with the ‘hosts’ file is pretty easy. You simply open the file and redirect the websites you want to block to your localhost. When anyone tries to visit one of those websites, the system sends the request to a dead end instead of the real server, and the browser shows an error saying the site cannot be reached.
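Why does this work? On most glibc-based distributions, programs look up host names through the Name Service Switch, and the `hosts:` line in `/etc/nsswitch.conf` normally lists `files` (that is, `/etc/hosts`) before `dns`, so an entry in the hosts file wins over whatever a DNS server would answer. The small function below is a hypothetical helper, not a standard tool; it is a sketch that just prints the lookup order from that line so you can confirm `files` comes first on your system.

```shell
# Hypothetical helper: print the lookup sources listed on the "hosts:" line
# of an nsswitch.conf-style file (default: /etc/nsswitch.conf), in order.
# "files" refers to /etc/hosts; "dns" refers to the configured DNS servers.
hosts_lookup_order() {
    # Blank out the "hosts:" keyword, trim the leading space, print the rest.
    awk '$1 == "hosts:" { $1 = ""; sub(/^ +/, ""); print }' "${1:-/etc/nsswitch.conf}"
}
```

Run `hosts_lookup_order` with no argument to read `/etc/nsswitch.conf`. The exact list varies by distribution, but as long as `files` appears before `dns`, the hosts file is consulted first.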

To achieve this, follow the instructions below and block some websites on your system.

First, open the ‘hosts’ file (use any editor you like):

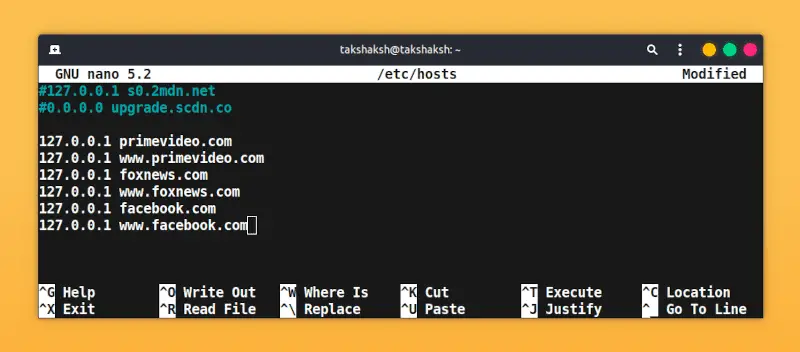

sudo nano /etc/hosts

Now add the domain names you want to block and redirect them to a dead address. A good choice is your localhost address, 127.0.0.1. Put each entry on its own line: the address first, then the domain name.
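Because the hosts file matches exact names and has no wildcards, `example.com` and `www.example.com` each need their own line. As a minimal sketch, assuming a POSIX shell and `awk`, the hypothetical `block_sites` function below appends a `127.0.0.1` line for each domain you pass and skips any name that is already listed. It takes the file path as its first argument, so you can try it on a scratch copy before pointing it at `/etc/hosts`.

```shell
# Hypothetical helper: append "127.0.0.1<TAB><domain>" to a hosts-format file
# for each domain given, skipping domains that already have an entry.
# Usage: block_sites FILE DOMAIN...
block_sites() {
    hosts_file=$1
    shift
    for domain in "$@"; do
        # awk exits 0 if the exact name already appears as a host name on a
        # non-comment line, and non-zero otherwise (including a missing file).
        if awk -v d="$domain" '
            $1 ~ /^#/ { next }
            {
                for (i = 2; i <= NF; i++) {
                    if ($i ~ /^#/) break
                    if ($i == d) found = 1
                }
            }
            END { exit !found }' "$hosts_file"; then
            echo "already listed: $domain"
        else
            printf '127.0.0.1\t%s\n' "$domain" >> "$hosts_file"
            echo "blocked: $domain"
        fi
    done
}
```

Back up the original first with `sudo cp /etc/hosts /etc/hosts.bak`. Then, assuming the function is saved in a file called `block-sites.sh` (a name chosen just for this example), run it as root: `sudo sh -c '. ./block-sites.sh && block_sites /etc/hosts example.com www.example.com'`.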

After saving your changes to the ‘hosts’ file, reconnect to the internet, and access to the listed websites will be blocked. Sometimes it can take a few minutes to take effect, since your system or browser may still have the old addresses cached.

And that is all you have to do to block any website on your computer.


Watch the video guide on YouTube

You can also watch a step-by-step video guide on YouTube to get a better understanding of the process.

Conclusion

So that’s how you block websites on Linux. If you have anything to share, let me know what you think in the comments. You can also subscribe to the LinuxH2O YouTube channel. Until next time, keep enjoying Linux.

Thanks

We used to do this at work with Squid a little over 20 years ago, during the tail end of the dot-com boom, to stop people from surfing porn sites. Squid was remarkably effective, and efficient, in this role, far more so than Microsoft Proxy Server, which kept crashing all the time. We ran it for quite some time until someone in another department screamed about it (they were slavishly devoted to Microsoft and only Microsoft). So it got taken down, and things got worse again, much to the disappointment of the employees I had to support. Furthermore, my manager, who had approved it, denied any knowledge of it after the firestorm started. But the experiment proved the point, and so I consider it successful.

To make web filtering effective, you have to make sure that whoever else is browsing doesn’t have root/admin access to whatever’s doing the filtering. In the case of a Squid server, that’s root on the Squid server. In the case of using /etc/hosts on the local workstation, that’s root on the local workstation.